Before we begin this session, I recommend going through the previous Prometheus blogs to understand this ongoing series better.

Prometheus and Grafana using kube-prometheus-stack Helm Chart

I highly recommend using the repo here - Day16 - to practise this project instead of copying code snippets. In case of confusion, please watch the video, which is explained in English. The code in this blog has not been changed, to keep the screenshots intact.

You can use this repo for the project:

```shell
git clone https://github.com/sagarkakkalasworld/Day16
```

Now, let us briefly recap what we learnt about Prometheus in the previous sessions.

Prometheus Basic Short Overview

Prometheus is essentially a time-series database for metrics. In our setup, exporters such as Node Exporter expose the metrics that Prometheus scrapes and stores, Prometheus rules define the conditions to watch for, and Alert Manager sends the notifications.

Before beginning, let us understand why Prometheus rules and alerts are needed.

Let us understand this with a basic example from the computers we use in our day-to-day life.

If the Windows system you are using is running too slowly, your first instinct is to open Task Manager and check what is causing the slowdown.

You might find that your Chrome browser or a game you have opened is causing the slowness, so you click "End Task" to free the CPU from that load, and your system runs smoothly again.

The same concept applies here. Let us assume we are running a website during a Black Friday sale. The website now has a lot of customers, and as a result the CPU load increases. As humans, we cannot keep watching whether the CPU load has crossed a certain threshold before making a decision.

Since we are using Prometheus, we already have the data we need. This is where Prometheus rules come in: they raise an alert when, for example, the CPU load is high. The condition is written in the expr field of the PrometheusRule manifest, which we will see later.

These alerts appear in the Alerts tab of the Prometheus UI, but we cannot keep checking that tab all the time. We need notifications in the applications we use daily, such as Slack, Teams or Gmail, and this is what Alert Manager is for.

Alert Manager can send the same alerts to different applications, or route specific groups of alerts to specific applications.

Now let us understand rules and Alert Manager better by applying these concepts.

Configuring Prometheus Rules

Let us configure Prometheus rules first. Before proceeding, make sure you have installed the kube-prometheus-stack Helm chart and have your setup ready. You can follow this blog for the installation steps - click here

Once your setup is ready, let us take a look at the manifest file.

For ease of use, you can take it from the project's GitHub path here - Prometheus Github GoldSite

```yaml
apiVersion: monitoring.coreos.com/v1
kind: PrometheusRule
metadata:
  name: react-nginx-cpu-alert
  namespace: monitoring
  labels:
    release: prometheus
spec:
  groups:
    - name: react-nginx-rules
      rules:
        - alert: LowPodCPUUsage
          expr: |
            sum(rate(container_cpu_usage_seconds_total{namespace="react-nginx"}[5m])) * 100 < 70
          for: 1m
          labels:
            severity: critical
          annotations:
            summary: "CPU usage is unusually low in the react-nginx namespace."
            description: |
              The CPU usage in the react-nginx namespace has been below 70% for the last 5 minutes.
              Check for potential issues with workload scaling or pod health.
```

Impact of Labels in Prometheus Rule Manifest File

Let us understand the manifest above before applying the rule. The most important part to take care of here is the labels section in metadata.

As you can see, it has the label release: prometheus.

By default, the value of this label is the release name we chose when installing the Helm chart.

Since we installed this chart with "helm install prometheus prometheus-community/kube-prometheus-stack -n monitoring", the release name is prometheus, so the default label is release: prometheus.

This label is what connects our rule manifest to the ruleSelector of the Prometheus custom resource.

We can verify this through the CRDs (Custom Resource Definitions) that come with this chart. CRDs are cluster-scoped, so the -n monitoring flag below has no effect, but it does no harm.

```shell
kubectl get crd -n monitoring | grep prometheus
```

From the screenshot above, the CRD that ties together service monitors, rules and much more is prometheuses.monitoring.coreos.com.

Let us check its YAML for labels:

```shell
kubectl get prometheuses.monitoring.coreos.com -n monitoring -o yaml
```

In the output, if you scroll down inside spec, you will find:

```yaml
ruleSelector:
  matchLabels:
    release: prometheus
```

This shows that Prometheus picks up our rule manifest through these labels.
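If you would rather not depend on the release label, kube-prometheus-stack exposes Helm values that control this selector. The snippet below is a minimal sketch of a values.yaml override. It assumes a recent chart version, and team: frontend is a hypothetical label used only for illustration, so check the key names against the values.yaml of your chart version before relying on it.

```yaml
prometheus:
  prometheusSpec:
    # Only consulted when ruleSelector below is empty: true (the chart
    # default) falls back to matching the Helm release label, false renders
    # an empty selector that matches every PrometheusRule.
    ruleSelectorNilUsesHelmValues: false
    # A non-empty selector is used as-is. "team: frontend" is a hypothetical
    # label that each PrometheusRule must then carry in metadata.labels.
    ruleSelector:
      matchLabels:
        team: frontend
```

With this override, our react-nginx-cpu-alert rule would only be loaded if you also add team: frontend to its metadata.labels.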

Explanation of Spec in Prometheus Manifest file

If you look at the spec, it has groups, and each group can hold any number of rules.

To keep it simple, we used only one rule. alert: LowPodCPUUsage is the name of the alert, and the most important field in the spec is expr, which is the Prometheus (PromQL) query we want to evaluate.

There are many possible queries; the one we use here is:

```promql
sum(rate(container_cpu_usage_seconds_total{namespace="react-nginx"}[5m])) * 100 < 70
```

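The expression above says: notify me when the CPU usage of all containers in the react-nginx namespace, averaged over the last 5 minutes and added together, is below 70. rate() on this counter gives CPU seconds used per second, which is a number of CPU cores, so multiplying by 100 expresses it as a percentage of one CPU core.

In practice, we usually alert when CPU is below 30% or above 80%. This lets us decide whether to scale the application down to save costs or scale it up to handle the load.

Since this is a demo, we kept the condition at less than 70 so that the alert fires and we get a clear picture of how these alerts work.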
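To make the 30% and 80% idea concrete, here is a sketch of a rule group with one alert for each side. The resource name, alert names, thresholds, for durations and severity: warning label are assumptions for illustration and are not part of the project repo. The container!="" filter is also an addition: it excludes the pod-level aggregate series that cAdvisor exports alongside the per-container ones, so CPU is not counted twice. As in our manifest, the value is a percentage of one CPU core.

```yaml
apiVersion: monitoring.coreos.com/v1
kind: PrometheusRule
metadata:
  name: react-nginx-cpu-thresholds   # hypothetical name
  namespace: monitoring
  labels:
    release: prometheus              # must match the Prometheus ruleSelector
spec:
  groups:
    - name: react-nginx-cpu-thresholds
      rules:
        # Fires when the namespace uses more than 80% of one core for 5 minutes
        - alert: HighPodCPUUsage
          expr: |
            sum(rate(container_cpu_usage_seconds_total{namespace="react-nginx", container!=""}[5m])) * 100 > 80
          for: 5m
          labels:
            severity: warning
          annotations:
            summary: "CPU usage is high in the react-nginx namespace; consider scaling up."
        # Fires when usage stays below 30% of one core for 30 minutes
        - alert: LowPodCPUUsageScaleDown
          expr: |
            sum(rate(container_cpu_usage_seconds_total{namespace="react-nginx", container!=""}[5m])) * 100 < 30
          for: 30m
          labels:
            severity: warning
          annotations:
            summary: "CPU usage is low in the react-nginx namespace; consider scaling down."
```

The longer for on the low-CPU rule is a deliberate choice. Scale-down decisions are rarely urgent, so waiting longer avoids reacting to a short lull in traffic.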

The labels inside the rule (here, severity: critical) indicate how much importance should be given to the alert. The annotations, such as summary and description, can be customized based on your requirements.
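Annotations can also use Prometheus template variables, so the message can carry the measured value. The sketch below shows an alternative annotations block for our rule, assuming you want the current value in the description. $value holds the result of expr when the rule is evaluated, and the printf pipe rounds it to two decimals.

```yaml
annotations:
  summary: "CPU usage is unusually low in the react-nginx namespace."
  # $value is the numeric result of expr at evaluation time;
  # printf "%.2f" rounds it to two decimal places.
  description: |
    Current CPU usage in react-nginx is {{ $value | printf "%.2f" }}% of one core,
    which is below the 70% threshold.
```

Our expression uses sum() without a by clause, so the alert carries no namespace or pod labels. That is why the namespace is written literally here instead of using $labels.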

Running Query through Prometheus UI

Let us log in to the Prometheus UI to execute the query.

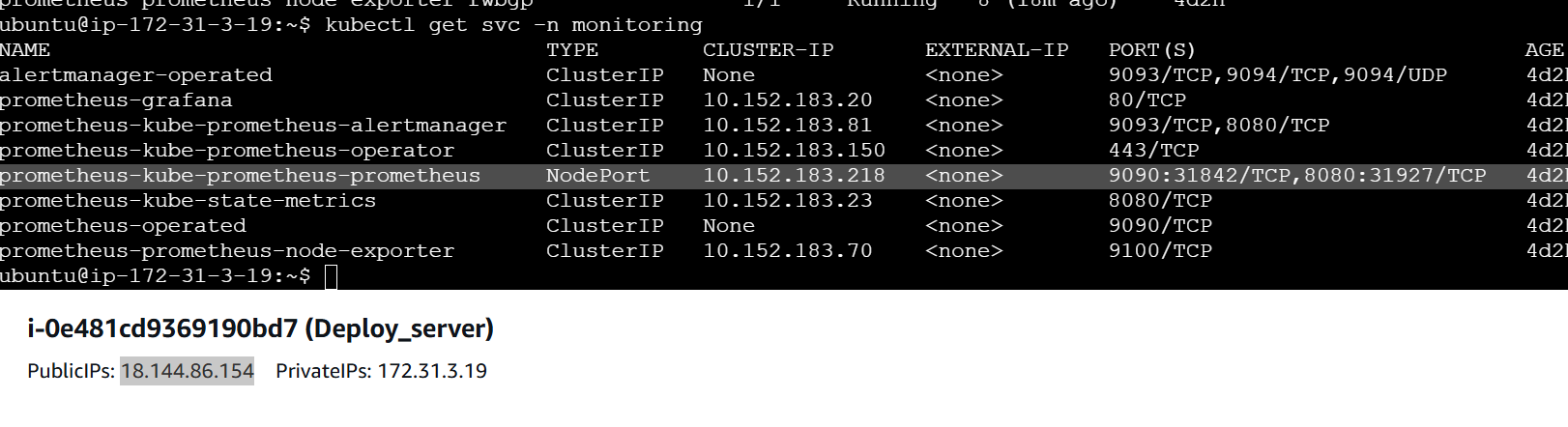

```shell
kubectl get svc -n monitoring
```

As seen in the screenshot above, Prometheus runs on a NodePort.

By default, the Prometheus service is of type ClusterIP; change it to NodePort to access the Prometheus UI.
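Instead of editing the Service by hand, you can have the chart create it as a NodePort. This is a minimal values.yaml sketch that assumes the prometheus.service keys of recent kube-prometheus-stack versions. 30090 is the chart's usual default nodePort, so verify both keys against your chart's values.yaml and allow that port in the EC2 security group.

```yaml
prometheus:
  service:
    # Expose the Prometheus UI on every node instead of only inside the cluster
    type: NodePort
    # Port opened on each node; must be inside the cluster's NodePort range
    # (30000-32767 by default)
    nodePort: 30090
```

You would apply this with helm upgrade and the -f flag, the same way values.yaml is applied for Alert Manager in this blog.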

Once you have opened the UI at http://AWS_EC2_Public_IP:NodePort (make sure the NodePort is allowed in your EC2 security group), run the query:

```promql
sum(rate(container_cpu_usage_seconds_total{namespace="react-nginx"}[5m])) * 100 < 70
```

As you can see from the image above, the value is around 0.04, which is less than 70. Since the condition is true, it will fire an alert.

Any alert that fires appears in the Alerts tab; set the filter to Firing to see only the alerts that are currently firing. Because the rule uses for: 1m, the alert first shows as Pending and moves to Firing once the condition has held for one minute.

In the same UI, go to Status > Rules to see the default rules configured by Prometheus.

To check the default Prometheus rules from our AWS EC2 instance, use:

```shell
kubectl get prometheusrules -n monitoring
```

Configuring Prometheus Rule using CRD

```yaml
apiVersion: monitoring.coreos.com/v1
kind: PrometheusRule
metadata:
  name: react-nginx-cpu-alert
  namespace: monitoring
  labels:
    release: prometheus
spec:
  groups:
    - name: react-nginx-rules
      rules:
        - alert: LowPodCPUUsage
          expr: |
            sum(rate(container_cpu_usage_seconds_total{namespace="react-nginx"}[5m])) * 100 < 70
          for: 1m
          labels:
            severity: critical
          annotations:
            summary: "CPU usage is unusually low in the react-nginx namespace."
            description: |
              The CPU usage in the react-nginx namespace has been below 70% for the last 5 minutes.
              Check for potential issues with workload scaling or pod health.
```

Save the above file as alertmanagerrules.yaml.

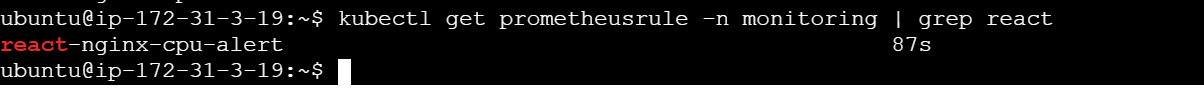

```shell
kubectl apply -f alertmanagerrules.yaml
```

Now let us check whether the rule exists on the server:

```shell
kubectl get prometheusrule -n monitoring | grep react
```

As you can see, our rule is created.

Let us also verify it in the Prometheus UI.

In the UI, open Status > Rules, press Ctrl+F and type "react" to find our rule easily.

Now that our rule is configured, let us check whether we are getting alerts for it, since the condition we specified is true.

As you can see from the screenshot above, our alert is firing. This confirms that our Prometheus rule is configured successfully.

Configure Slack Channel Before Configuring Alert Manager

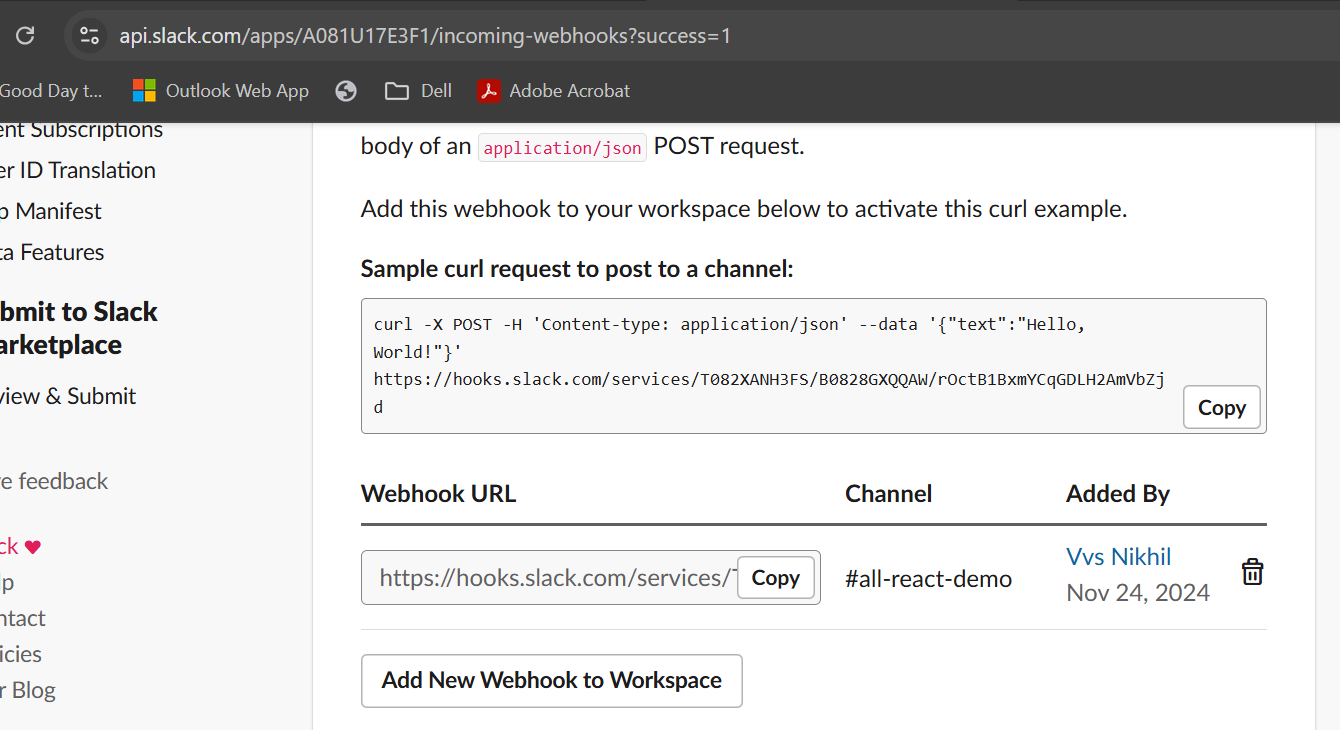

Since we will use Slack as our notification channel, first create a Slack channel before configuring Alert Manager. Then create a Slack app, add the app to this channel, and get a webhook.

You can use this blog to see how - Slack Channel Configuration. That blog covers the bot token; you can skip that part and instead select Incoming Webhooks and turn the feature on.

Then copy the webhook URL generated on the same page.

Keep this webhook URL safe. We will store it in a Kubernetes Secret, and it is what allows notifications to be sent from Prometheus to Slack.

Configuring Alert Manager

We now have our alerts in the UI, but as humans we cannot keep checking Prometheus for them; we need to be notified when they fire. This is where Alert Manager comes to the rescue.

Alert Manager can notify us through applications we use every day, such as Slack, Teams, Gmail, Discord and many more.

As discussed earlier, we will use a Slack channel here.

Unlike Prometheus rules or service monitors (previous session), Alert Manager does not come with a label selector that attaches our Alert Manager manifests to it.

Let us verify this.

```shell
kubectl get crd -n monitoring | grep alertmanager
```

We need alertmanagers.monitoring.coreos.com to configure our Alert Manager.

```shell
kubectl get alertmanagers.monitoring.coreos.com -n monitoring -o yaml
```

From the screenshot above, we can see that alertmanagerConfigSelector: {} does not have any labels. To fix this, we need to add labels so that the Alertmanager resource can select the manifests we create for Alert Manager by label.

Adding Labels for Alert Manager

Since Alert Manager was installed from a Helm chart hosted online, we do not have a local values.yaml to change.

For this, let us export the chart's default values file:

```shell
helm show values prometheus-community/kube-prometheus-stack -n monitoring > values.yaml
```

Now let us edit the values.yaml we just generated:

```shell
vi values.yaml
```

In vi, press Esc to make sure you are in normal mode, then type /alertmanagerConfigSelector and press Enter to search for it.

Now, make the change as follows:

```yaml
alertmanagerConfigSelector:
  matchLabels:
    release: prometheus
```

You can use any label you want, but make sure the same label is added to your Alert Manager manifest so that it gets attached through the label.

There is one more change to make in the same file:

```yaml
alertmanagerConfigMatcherStrategy:
  type: None
```
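In the chart's values.yaml, both keys sit under alertmanager.alertmanagerSpec, so the edited section should look roughly like the sketch below, with other keys omitted.

As I understand the operator's behaviour, the default strategy (OnNamespace) adds a matcher to every route generated from an AlertmanagerConfig. That matcher requires the alert's namespace label to equal the AlertmanagerConfig's namespace, which is monitoring here. Our alert expression uses sum(), which drops the namespace label, so such a matcher would never match our alert. This is likely why type: None matters for this demo.

```yaml
alertmanager:
  alertmanagerSpec:
    # Only AlertmanagerConfig resources carrying this label are loaded
    alertmanagerConfigSelector:
      matchLabels:
        release: prometheus
    # None: do not add the automatic namespace matcher to routes
    # generated from each AlertmanagerConfig
    alertmanagerConfigMatcherStrategy:
      type: None
```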

Once the changes are done, save the file.

Now upgrade the Helm release with the edited values.yaml using the -f flag:

```shell
helm upgrade prometheus prometheus-community/kube-prometheus-stack -n monitoring -f values.yaml
```

Once it is deployed, let us verify that the CRD has the updated labels before proceeding to the next step:

```shell
kubectl get alertmanagers.monitoring.coreos.com -n monitoring -o yaml
```

As you can see from the screenshot above, our Alert Manager is now ready with labels.

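Alternate way without changing the Helm chart

If you prefer not to edit values.yaml, you can patch the Alertmanager resource directly. This patch sets both the label selector and the matcher strategy, mirroring the two changes made in values.yaml:

```shell
kubectl patch alertmanager prometheus-kube-prometheus-alertmanager \
  -n monitoring \
  --type merge \
  -p '{"spec": {"alertmanagerConfigSelector": {"matchLabels": {"release": "prometheus"}}, "alertmanagerConfigMatcherStrategy": {"type": "None"}}}'
```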

Configure Secret for Slack Webhook

Before we proceed with Alert Manager, let us create a Secret for the Slack webhook we generated earlier.

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: slack-webhook
  namespace: monitoring
  labels:
    release: prometheus
type: Opaque
stringData:
  # Replace this with the webhook URL generated for your own Slack app
  slack_webhook_url: 'https://hooks.slack.com/services/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'
```

Save it as alertmanagersecrets.yaml.

```shell
kubectl apply -f alertmanagersecrets.yaml
```

Once the secret is created, verify it with:

```shell
kubectl get secrets -n monitoring | grep slack
```

Make sure this secret is in the same namespace as the Prometheus application (monitoring). Also note that the AlertmanagerConfig we create next must be in that same namespace.

As you can see, this secret has the same label we specified for Alert Manager. The label is not strictly required on the Secret, because the AlertmanagerConfig refers to it by name, but it keeps things consistent.

Understanding Manifest file of Alert Manager

```yaml
apiVersion: monitoring.coreos.com/v1alpha1
kind: AlertmanagerConfig
metadata:
  name: react-demo
  namespace: monitoring
  labels:
    release: prometheus
spec:
  route:
    groupBy: ['alertname']
    groupWait: 30s
    groupInterval: 5m
    repeatInterval: 1h
    continue: true
    receiver: 'slack-notification'
    routes:
      - receiver: 'slack-notification'
        continue: true
  receivers:
    - name: 'slack-notification'
      slackConfigs:
        - channel: '#all-react-demo'
          apiURL:
            name: slack-webhook
            key: slack_webhook_url
          title: "{{ range .Alerts }}{{ .Annotations.summary }}{{ end }}"
          text: "{{ range .Alerts }}{{ .Annotations.description }}{{ end }}"
```

As you can see from the file above, it has the same label we specified in the Alert Manager configuration. The spec has a route section with options such as groupBy, which groups notifications by alertname, and this route sends them to the receiver slack-notification.

In this manifest you can specify any number of routes and decide which route goes to which receiver. Since we use only Slack in this demo, there is only a Slack receiver. You can add Teams, Gmail or Discord receivers and customize the routing, for example CPU alerts to Slack, memory alerts to email, and disk alerts to both Teams and Slack. There are no limitations here.
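As a sketch of that kind of routing, the spec below sends critical alerts and warning alerts to different Slack receivers and keeps slack-notification as the fallback. AlertmanagerConfig v1alpha1 expresses route conditions as matchers with name, value and matchType. A Slack incoming webhook is bound to one channel, so this sketch assumes one webhook per channel. The receiver names slack-critical and slack-warning and the Secret keys slack_critical_webhook_url and slack_warning_webhook_url are hypothetical; you would add those keys to the slack-webhook Secret yourself.

```yaml
spec:
  route:
    groupBy: ['alertname']
    receiver: 'slack-notification'        # fallback for anything unmatched
    routes:
      # severity="critical" alerts go to the critical channel's webhook
      - receiver: 'slack-critical'         # hypothetical receiver
        matchers:
          - name: severity
            value: critical
            matchType: "="
      # severity="warning" alerts go to a lower-priority channel's webhook
      - receiver: 'slack-warning'          # hypothetical receiver
        matchers:
          - name: severity
            value: warning
            matchType: "="
  receivers:
    - name: 'slack-notification'
      slackConfigs:
        - apiURL:
            name: slack-webhook
            key: slack_webhook_url
    - name: 'slack-critical'
      slackConfigs:
        - apiURL:
            name: slack-webhook
            key: slack_critical_webhook_url   # hypothetical key
    - name: 'slack-warning'
      slackConfigs:
        - apiURL:
            name: slack-webhook
            key: slack_warning_webhook_url    # hypothetical key
```

Child routes do not set continue here, so each alert stops at the first route it matches.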

Coming back to our receiver: it has a channel field, which does not play a significant role here, because a Slack app's incoming webhook is already tied to the channel chosen when it was created. The main part is the webhook. apiURL refers to the Secret by name (slack-webhook) and key (slack_webhook_url), and that key holds our webhook URL. As you can also see, we set the title to the alert's summary annotation and the text to its description.

We can customize the title and text further using Go templates.
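For example, a slackConfigs entry could show whether the alert is firing or resolved and list each alert's severity. This sketch uses standard Alertmanager template data (.Status, .CommonLabels, .Alerts) and the toUpper template function. sendResolved: true is an assumption here; it also posts a message when the alert clears.

```yaml
slackConfigs:
  - apiURL:
      name: slack-webhook
      key: slack_webhook_url
    # Also notify when the alert goes back to normal
    sendResolved: true
    # e.g. "[FIRING] LowPodCPUUsage" or "[RESOLVED] LowPodCPUUsage"
    title: '[{{ .Status | toUpper }}] {{ .CommonLabels.alertname }}'
    # One line per alert in the group: severity, then the summary annotation
    text: |
      {{ range .Alerts }}*{{ .Labels.severity }}*: {{ .Annotations.summary }}
      {{ end }}
```

.CommonLabels.alertname is always populated here because the route groups by alertname.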

Once an alert fires, Alert Manager sends a notification to our Slack channel.
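Notifications are not instantaneous, though, because the timing fields in our route decide when Slack hears about an alert. Here is the route block from the AlertmanagerConfig manifest, trimmed to the timing fields, with comments on what each one does. The values are unchanged.

```yaml
route:
  groupBy: ['alertname']   # alerts with the same alertname share one notification
  groupWait: 30s           # after the first alert of a new group, wait 30s to batch others
  groupInterval: 5m        # wait 5m before notifying about new alerts added to an existing group
  repeatInterval: 1h       # re-send the notification every 1h while the alert keeps firing
  receiver: 'slack-notification'
```

The rule also has for: 1m, so the first Slack message typically arrives roughly 90 seconds or more after CPU drops below the threshold, plus up to one rule evaluation interval.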

Let us apply this manifest file. Save the manifest above as alertmanagernotifications.yaml.

```shell
kubectl apply -f alertmanagernotifications.yaml
```

To verify the AlertmanagerConfig, run:

```shell
kubectl get alertmanagerconfigs -n monitoring
```

After configuring Alert Manager, you can check alerts in the Alerts tab of the Prometheus UI. Any firing alerts will now also be sent to Slack.

As you can see above, we received the Slack alert LowPodCPUUsage that we configured earlier.

This concludes our blog.

🔹 Important Note

Before proceeding to the next session, please do the homework to understand this session better - DevOps Homework

I post most of my content in Telugu: contrafactums (new lyrics for original songs), fun vlogs, travel stories and much more to explore. You can use this link as a single point of access - Sagar Kakkala One Stop

🖊 Feedback, queries and suggestions about the blog are welcome in the comments.
